Nanometers

I first planned to use white LEDs to illuminate the film. But now I think the ideal source is an RGB LED. Here’s why…

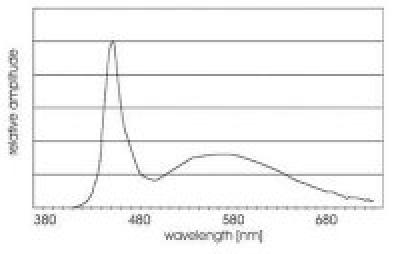

White LEDs are actually blue LEDs with a phosphor coating that absorbs some of the blue light and re-emits it as green-to-red light, so the combined output looks white. The resulting spectral distribution of this source looks like this:

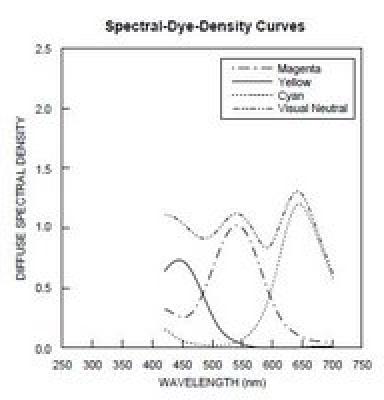

In a telecine machine, we are trying to measure the density of 3 layers of colored dyes in the processed film. For Kodachrome movie film, the spectral densities of these dyes look like this:

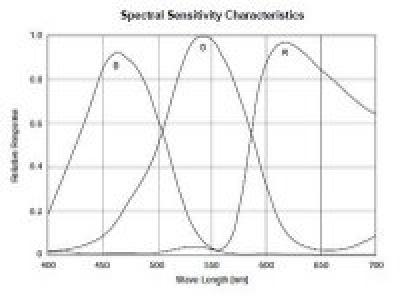

The CCD imager that I am using to measure the density of these dyes has a color filter array (CFA) made of colored dyes. The spectral transmittance of these dyes, combined with the spectral sensitivity of the silicon sensor, results in a sensitivity of the device that looks like this:

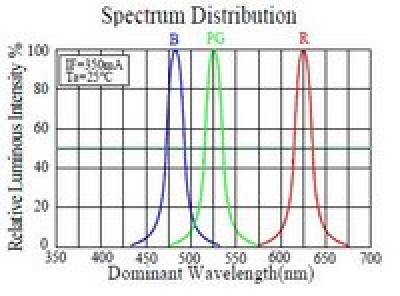

The peak response wavelengths of the red, green and blue pixels in the CCD almost match the peak blocking wavelengths of the red, green and blue dyes in the film as shown above, making the CCD a fine device for measuring the color on the film. The white LED spectrum doesn’t match so well, but look at the spectral output of the RGB LED…

Now we have a narrow band illuminant that matches the film dyes and the CCD pixels very nicely (though it would be nice to have a bluer blue). Aside from the obvious elegance on paper, this allows the illuminant intensity to be adjusted so that the red, green and blue pixels of the CCD will all saturate at the same exposure setting. Or, more important, it means that an exposure setting can be chosen that will nearly saturate all three colors, meaning we are using the full dynamic range of the CCD, which is, after all, somewhat limited.
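The balancing step described above can be sketched roughly as follows. This is only an illustration: it assumes the CCD responds linearly to LED drive level, that each LED channel can be driven independently, and that the peak pixel values were measured through an open gate (no film) without clipping. The function name `balance_led_drive`, the 0–1 drive scale, and the example numbers are all hypothetical, not taken from my actual hardware.

```python
def balance_led_drive(drive, peak, full_scale, headroom=0.95, max_drive=1.0):
    """Rescale each LED channel so its CCD peak lands near full scale.

    drive      -- current drive level per channel, e.g. {"r": 0.5, ...} (assumed 0..1)
    peak       -- peak pixel value measured per channel at that drive level
    full_scale -- CCD saturation value (e.g. 255 for 8-bit output)
    headroom   -- fraction of full scale to aim for, leaving a margin below clipping
    """
    target = headroom * full_scale
    balanced = {}
    for channel in ("r", "g", "b"):
        # A clipped (or zero) reading carries no usable scale information.
        if not 0 < peak[channel] < full_scale:
            raise ValueError(f"{channel}: peak must be above zero and below full scale")
        # Assumes a linear response: doubling the drive doubles the pixel value.
        wanted = drive[channel] * target / peak[channel]
        # Never ask for more than the LED driver can deliver.
        balanced[channel] = min(max_drive, wanted)
    return balanced


if __name__ == "__main__":
    # Made-up readings: blue is weakest, so it needs the biggest boost.
    current = {"r": 0.40, "g": 0.50, "b": 0.60}
    measured = {"r": 230, "g": 180, "b": 120}
    print(balance_led_drive(current, measured, full_scale=255))
```

If a channel hits `max_drive` before reaching the target, the other two would have to be scaled down to match it, or the exposure time lengthened; the sketch leaves that decision to the caller.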

Absolutely – this is the correct way to go.

The subtlety is that the overlap in the sensitivity of the CCD sensor response (with its optical filters) and the overlap of the dye colours between the different colour channels cause so-called interimage (intercolour) effects. These disappear if you use a three-line spectrum light source.

LEDs are not quite line spectra, mainly due to the mix and impurities in the phosphors used, but they will give much better results in this respect than broad-spectrum light sources.

This is most obvious in faded film, which is quite easy to correct if you use LEDs, as the faded channels are obvious and easy to adjust. If you use broad-spectrum light, the problems cross-contaminate other channels.

-Steve

Comment by steve — November 17, 2017 @ 12:27 pm

Most of what you said about color match is not exact.

The RGB spectra of the LED and/or the sensor DO NOT exactly match the color characteristics of Kodak (or any other) film stock. There is a lot of research by prof. Barbara Fluger and a Polish guy (whose name I forget, but the company is FixaFilm) showing that you cannot scan ALL available colors with just current RGB sensors. For that reason we developed a full color gamut scanning head that captures ALL colors available in the film stock, independent of the emulsion, the dyes, and the technology used to make it. More on that project at fullcolorgamut.xyz

Comment by Radoslav — January 12, 2018 @ 8:03 pm

Hi Radoslav,

Most intriguing.

I can find no reference to Barbara Fluger; perhaps my Google-fu is not up to it.

I agree, the colours of LED illumination and the colour sensitivities of the CCD sensors and their Bayer masks do not exactly match those of Kodak, Fuji or any other film.

However, this does not matter: we are trying to measure the density of the Red, Green and Blue dyes whilst minimising the effect of the Red dye on the Blue and Green channels etc. (the intercolour interlayer effects).

Professional telecines use emulsion-specific intercolour cancellation matrices to compensate for this, and these work very well. However, generating intercolour matrices needs access to precision calibrated test films (Kodak TAF, for example). 35mm test films are still available on eBay, but they are hard to find for many 16mm films, let alone 8mm and Super 8 films.

If you were to find a TAF, for best results you would have to find one of a similar age and which had been stored in similar conditions to your archive film…

Using a (near) line-spectrum light source achieves almost the same thing: we cannot compensate for the colour-sensitivity intercolour effects, only the dye effects, but this gets us most of the improvement that the matrices give.
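For anyone unfamiliar with what an intercolour cancellation matrix does, here is a rough sketch of the idea. It assumes a simple linear model in which each measured channel density is the wanted dye density plus small fractions of the other two; the 3x3 crosstalk values below are invented purely for illustration and do not describe any real film stock or telecine. In practice the matrix would be derived from measurements of a calibrated test film.

```python
import math


def density(transmittance):
    """Optical density from transmittance (0 < T <= 1): D = -log10(T)."""
    return -math.log10(transmittance)


def invert_3x3(m):
    """Invert a 3x3 matrix using the adjugate / determinant formula."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("crosstalk matrix is singular")
    return [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]


def apply_matrix(m, v):
    """Multiply a 3x3 matrix by a 3-element vector."""
    return [sum(m[row][k] * v[k] for k in range(3)) for row in range(3)]


# HYPOTHETICAL crosstalk: row = measured channel (R, G, B),
# column = contribution of the dye meant for R, G, B respectively.
CROSSTALK = [
    [1.00, 0.10, 0.02],
    [0.08, 1.00, 0.12],
    [0.03, 0.15, 1.00],
]

# The cancellation matrix is the inverse of the crosstalk matrix.
CORRECTION = invert_3x3(CROSSTALK)


def correct_densities(measured):
    """Estimate per-dye densities from measured (R, G, B) channel densities."""
    return apply_matrix(CORRECTION, measured)


if __name__ == "__main__":
    # Made-up pixel transmittances for the R, G and B channels.
    measured = [density(t) for t in (0.20, 0.35, 0.50)]
    print(correct_densities(measured))
```

With a near line-spectrum source the off-diagonal terms due to the dyes become small, so the correction matrix ends up close to the identity, which is why the light source alone recovers most of the benefit.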

It's possible that the film may have some sensitivity outside of the main RGB lobes (non-rectangular passband), though this will be very low sensitivity. However, this can only introduce increased density in the main R, G and B dyes; there are no IR or UV dyes in movie films.

You can also get wide gamut light by illuminating the film with broad spectrum light, some of which is passed by the imperfect (again, non rectangular) colour passband of the film dyes.

The limitation on the *real* colour gamut of film is the colour sensitivities of the film. You can produce other colours, but these never existed in the original scene; they are only artefacts of the film's imperfect colour response.

I still assert that line spectrum light sources are the most accurate way to measure the colour content of film. They also have the advantage of making later digital colour correction much easier by removing the majority of the intercolour effects.

-Steve

Comment by steve — March 10, 2018 @ 7:39 pm